A Markov chain describes a sequence of events in which the probability of each event depends only on the state reached in the previous event, not on the full history that led there.
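Formally, if $X_0, X_1, X_2, \dots$ denote the state at each step (notation introduced here for illustration), this "depends only on the previous event" condition, known as the Markov property, can be written as:

$$
P(X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \dots, X_0 = x_0) = P(X_{n+1} = x \mid X_n = x_n)
$$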

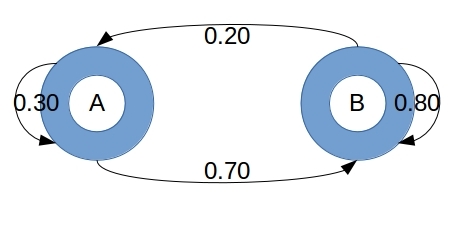

Figure 1 shows a two-state Markov chain with states A and B. From state A, the chain moves to B with probability 0.70 and stays in A with probability 0.30. From state B, it moves to A with probability 0.20 and stays in B with probability 0.80. The outgoing probabilities from each state sum to 1.
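To make the two-state chain concrete, here is a minimal Python sketch that encodes the transition probabilities from Figure 1, simulates a path, and computes the long-run (stationary) share of time spent in each state. The names `TRANSITIONS`, `next_state`, `simulate`, and `stationary_two_state` are hypothetical helpers chosen for illustration, and the code uses only the standard library.

```python
import random

# Transition probabilities for the two-state chain in Figure 1.
# TRANSITIONS[current][nxt] is the probability of moving from `current` to `nxt`.
TRANSITIONS = {
    "A": {"A": 0.30, "B": 0.70},
    "B": {"A": 0.20, "B": 0.80},
}


def next_state(current, rng):
    """Sample the next state using only the current state (the Markov property)."""
    r = rng.random()
    cumulative = 0.0
    for state, p in TRANSITIONS[current].items():
        cumulative += p
        if r < cumulative:
            return state
    return state  # guard against floating-point round-off in the cumulative sum


def simulate(start, steps, seed=0):
    """Return a path of length steps + 1 beginning at `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        path.append(next_state(path[-1], rng))
    return path


def stationary_two_state(p_ab, p_ba):
    """Closed-form stationary distribution of a two-state chain.

    Balance condition: pi_A * p_ab = pi_B * p_ba, with pi_A + pi_B = 1.
    """
    total = p_ab + p_ba
    return {"A": p_ba / total, "B": p_ab / total}


if __name__ == "__main__":
    print(simulate("A", 10))
    print(stationary_two_state(0.70, 0.20))
```

With the Figure 1 values, the stationary distribution works out to $\pi_A = 0.20 / 0.90 = 2/9 \approx 0.222$ and $\pi_B = 0.70 / 0.90 = 7/9 \approx 0.778$, so over a long simulated run the chain should spend roughly 78% of its steps in state B.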